The Absurdity of the Open Letter Calling for a Pause on AI Development

While well-intentioned, the proposal signed by Elon Musk and 1,187 others is fundamentally flawed.

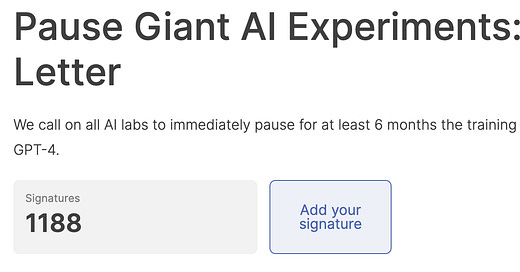

Yesterday, 1,188 people (including researchers, tech critics, a few of my closest friends, and, for some reason, Elon Musk, whom I'll get to in a minute) released an open letter titled "Pause Giant AI Experiments." Specifically, the letter calls for a 6-month pause on training any AI system more powerful than GPT-4, the groundbreaking model that powers OpenAI's ChatGPT Plus.

While I believe many (but not all) of the people behind this letter signed it with good intentions, its approach to guiding AI into the future is fundamentally flawed.

Their concern, in summary, is that AI development is starting to get out of control and that "Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable." To be clear, they are not calling for an end to AI development, but for a "public and verifiable" pause by all key actors, during which work would refocus on making AI "more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal."

Signatories include Elon Musk, Andrew Yang (2020 presidential candidate), Steve Wozniak, the CEO of Stability AI, and co-founders of Pinterest and Ripple, along with a large number of research scientists at companies like DeepMind (owned by Google).

Whatever its authors' intentions, the letter doesn't propose a plausible strategy for guiding safe and ethical AI. Instead, it is an adversarial provocation designed to capture media attention. Take even a cursory look beneath the surface, and the problems become abundant and apparent:

Although it is addressed to the entire AI industry, the letter clearly targets only one company, the leader in this space: OpenAI, whose GPT-4 model the letter cites as the most powerful an AI system should be allowed to become for now. Every other AI model would therefore be exempt from the pause, since none of them is as "powerful" as GPT-4.

Speaking of which, how do you objectively measure how "powerful" an AI model is? What makes a model close to GPT-4? Which metrics do you use? This letter has NONE of that information, and I'm not convinced an objective measure even exists.

The most prominent name on the letter, Elon Musk, is working on his own OpenAI/ChatGPT competitor, according to The Information. Forcing his chief rival to pause its work would benefit Elon's ambitions to build a competitor. His motivations for signing this letter are suspect and should be viewed with great skepticism.

Elon isn't the only competitor who signed this letter. Researchers at Google and DeepMind, a division of Google, signed it as well. Google is a direct competitor to OpenAI and would benefit from a pause, as its public-facing Bard chatbot doesn't compare to OpenAI's ChatGPT. Several people from other OpenAI competitors have signed the letter too.

The idea that there is any legal or societal way to pause all AI development across the board, globally, is, in my opinion, absurd. How do you enforce an AI ban? Who gets to enforce it? How do you define their powers? Who decides which companies and AI models are or are not advanced enough? This is just the beginning of the rabbit hole of unanswered questions. This letter doesn't include ANY of the details that would make its proposal even mildly plausible.

And even if a pause could be enforced in the U.S., why would nations like China ever honor it, when ignoring it would give them a chance to catch up to the U.S. on AI development?

In my opinion, the approach this open letter proposes is misguided and will create more problems than it solves. The vast majority of its signatories are well-intentioned but haven't thought through the logistics or ramifications of their proposal. The letter stokes more fear than it offers solutions, which may well be the point. (It is certainly generating PR.) Fear always captures attention, even when that fear is somewhat manufactured.

We've been through this before with CRISPR, the incredible gene-editing technology that is revolutionizing biology and genetics. Once CRISPR was invented, scientists found ways to use it to advance treatments for cancer and other diseases. And while a few people abused the technology, researchers and governments around the world came together to regulate it without calling for a complete ban on its development.

I agree that we need to build more ethical guardrails into AI. I have written extensively in this newsletter about a potential AI Code of Ethics and have spoken with some of the world's top ethicists (many of whom have not signed this open letter to date). But calling for an immediate pause on AI development, aimed primarily at one company, isn't a plausible or effective way to get there.

Instead, we need to convene leaders from AI, business, academia, government, and the arts for an unfiltered discussion about the best path forward. I am actively working on this now with key leaders in AI, business, and Congress, and I know other leaders in AI, policy, and academia are doing the same.

AI has the power to transform our world, both for the better and for the worse. But technology has always, on a long enough time scale, improved the human condition. Instead of proposing an impossible solution and stoking fear, we should guide AI’s development through collaboration, transparency, practical solutions, and most of all, hope.

More to come.

~ Ben

P.S. I will be speaking about the Open Letter tonight at the Generative AI Social in San Francisco.